Quality Assurance in a security and compliance platform is not just about testing screens or checking UI flows.

It is about validating something bigger.

Trust.

Because these platforms influence real decisions: security posture, audit readiness, control completion, evidence approval, remediation priorities, and reporting.

So here is a simple question.

If your platform says “Verified” or “Audit-ready”… Would you bet an audit on it?

If the answer is not a confident yes, QA has more to do than just checking UI flows.

Many organizations align their security programs with frameworks like the National Institute of Standards and Technology (NIST) Cybersecurity Framework (CSF) 2.0 and with compliance requirements such as the Cybersecurity Maturity Model Certification (CMMC) 2.0. In that context, QA is not only testing features. It is validating that the system supports real-world readiness in a way that is accurate, secure, traceable, and usable.

In this two-part blog series, I will explain the real QA mindset behind testing a security and compliance platform. In this first part, I will focus on why trust matters, what these platforms are built for, and what QA tests from governance to remediation.

Why does this kind of platform exist?

Security and compliance work often becomes scattered.

Not because teams do not care, but because work lives everywhere.

- Policies exist, but enforcement is inconsistent

- Evidence is spread across drives, emails, screenshots, and tickets

- Teams do not know what “done” actually means for a control

- Gaps are known but do not turn into owned action

- Readiness gets measured only at audit time, which is too late

- There is no single source of truth for progress and accountability

This kind of platform exists to connect the full chain:

Controls (requirements) → Evidence (proof) → Gaps (what’s missing) → Remediation (how it gets fixed)

It turns compliance from a last-minute scramble into continuous readiness.

How does this help protect against exploitation threats?

A compliance platform does not block attackers like a firewall.

Its value is that it reduces the conditions attackers exploit: the weak points that stay hidden, ignored, or unowned.

Attackers often succeed when there is:

- Weak identity and access controls

- Missing MFA or excessive privileges

- Outdated policies and untracked changes

- Poor visibility and incomplete logging

- Unmanaged devices or remote sessions

- Unclear incident response responsibilities

- Gaps that stay open for months

These platforms reduce exploitation risk by ensuring:

- Gaps are visible and not buried in documents

- Proof is maintained through the evidence lifecycle and verification

- Ownership is clear so someone is responsible for closing gaps

- Remediation is executed and not only documented

- Reporting is defensible because numbers match underlying truth

This is what turns compliance work into practical risk reduction.

QA focus area: Making compliance traceable and enforceable

If the system says a control is satisfied, we should be able to answer:

- Which requirement does it satisfy?

- What evidence proves it?

- Who verified it?

- When did they verify it?

That is traceable.

And if a user tries to bypass the workflow, for example marking work complete without proof or changing verification without authority, the system should block them.

That is enforceable.

This is why QA tests the platform as an accountability system, not just a UI.
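To make "traceable" and "enforceable" concrete, here is a minimal sketch of how a QA check might express both. It assumes a hypothetical in-memory data model. The `Control` and `Evidence` classes and the `trace_gaps` and `mark_satisfied` functions are illustrative names, not any real platform's API. `trace_gaps` asks the four questions above of every satisfied control. `mark_satisfied` stands in for the workflow rule that completion without verified proof must be blocked.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Evidence:
    # Hypothetical evidence record: who verified it and when
    evidence_id: str
    verified_by: Optional[str] = None
    verified_at: Optional[datetime] = None


@dataclass
class Control:
    # Hypothetical control record linked to a framework requirement
    control_id: str
    requirement_id: Optional[str]
    status: str = "open"
    evidence: list[Evidence] = field(default_factory=list)


def trace_gaps(control: Control) -> list[str]:
    """Return the traceability questions a 'satisfied' control cannot answer."""
    if control.status != "satisfied":
        return []
    problems = []
    if not control.requirement_id:
        problems.append("no linked requirement")   # which requirement?
    if not control.evidence:
        problems.append("no evidence")             # what proves it?
    for ev in control.evidence:
        if not ev.verified_by:
            problems.append(f"{ev.evidence_id}: no verifier")          # who?
        if ev.verified_at is None:
            problems.append(f"{ev.evidence_id}: no verification time")  # when?
    return problems


def mark_satisfied(control: Control) -> None:
    """Enforce the workflow: refuse completion without verified proof."""
    if not any(ev.verified_by and ev.verified_at for ev in control.evidence):
        raise PermissionError(
            f"{control.control_id}: cannot be satisfied without verified evidence")
    control.status = "satisfied"
```

The useful negative case is a control that reached "satisfied" through some other path, such as a direct status edit or a data import. `trace_gaps` should still flag it even though the workflow guard was never exercised.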

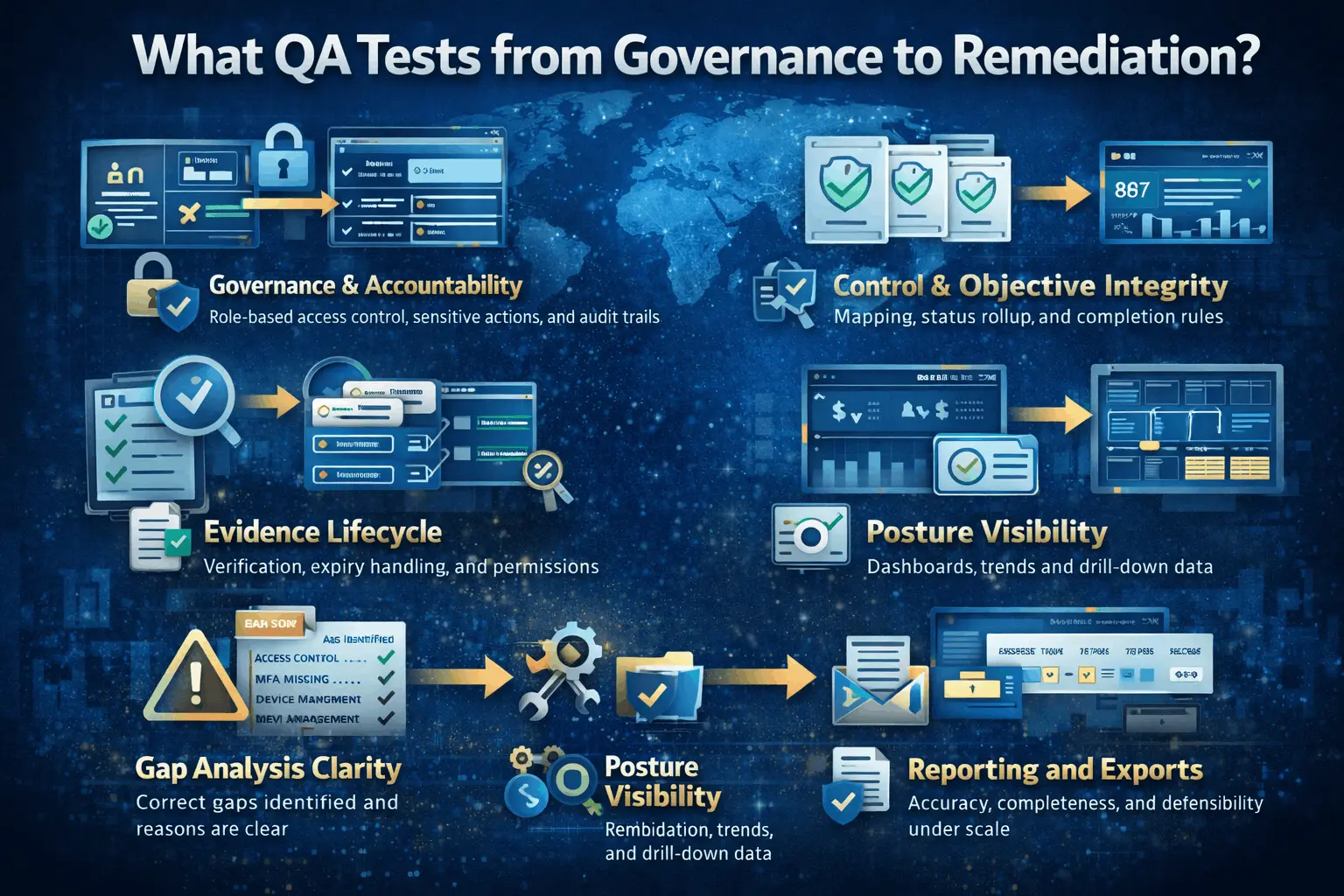

What does QA test, from governance to remediation?

QA testing in a security and compliance platform goes beyond verifying features. Each stage must be accurate, enforceable, and traceable so that the platform remains a trustworthy source of truth under audit and real-world risk conditions.

1) Governance and accountability

We start by validating who can do what, because trust depends on strong boundaries.

We test:

- Role-based access control (RBAC): who can view, edit, approve, verify, export

- Sensitive actions: verify or unverify, delete evidence, change ownership, change status

- Audit trails: critical changes recorded with user, timestamp, and action

- Multi-tenant boundaries (if applicable): no cross-organization data access

Why it matters:

- Unauthorized edits can change compliance status

- Sensitive evidence exposure can become a security incident

- Audit trails are the difference between “we think” and “we can prove”
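One common way to test RBAC is a table-driven matrix. You enumerate every role and action pair, compare the outcome against the documented policy, confirm that each allowed sensitive action writes exactly one audit entry, and confirm that the same action against another tenant is always denied. In this sketch the roles, actions, `POLICY`, and `perform` are all hypothetical. `perform` is a stand-in that a real suite would replace with calls to the platform under test.

```python
from datetime import datetime, timezone
from itertools import product

# Hypothetical documented policy: the expected permissions per role
POLICY = {
    "viewer": {"view"},
    "contributor": {"view", "edit"},
    "assessor": {"view", "verify", "unverify", "export"},
    "admin": {"view", "edit", "verify", "unverify", "export",
              "delete_evidence", "change_owner"},
}
SENSITIVE = {"verify", "unverify", "delete_evidence", "change_owner"}
ACTIONS = sorted(set().union(*POLICY.values()))

audit_log: list[dict] = []


def perform(role: str, user: str, action: str, tenant: str, target_tenant: str) -> bool:
    """Stand-in for the platform: deny by default, block cross-tenant access,
    and record sensitive actions with user, action, and timestamp."""
    allowed = tenant == target_tenant and action in POLICY.get(role, set())
    if allowed and action in SENSITIVE:
        audit_log.append({"user": user, "action": action,
                          "at": datetime.now(timezone.utc)})
    return allowed


def check_matrix() -> list[str]:
    """Exercise every role x action pair and report mismatches against the policy."""
    failures = []
    for role, action in product(POLICY, ACTIONS):
        expected = action in POLICY[role]
        before = len(audit_log)
        actual = perform(role, f"{role}-user", action, "org-a", "org-a")
        if actual != expected:
            failures.append(f"{role}/{action}: expected {expected}, got {actual}")
        if actual and action in SENSITIVE and len(audit_log) != before + 1:
            failures.append(f"{role}/{action}: sensitive action not audited")
        # The same action against another organization must always be denied
        if perform(role, f"{role}-user", action, "org-a", "org-b"):
            failures.append(f"{role}/{action}: cross-tenant access allowed")
    return failures
```

The matrix form matters because permission bugs tend to hide in the pairs nobody thought to click through by hand.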

2) Control and objective integrity

Controls and objectives are the truth layer. If the rule engine is wrong, everything built on it becomes misleading.

We test:

- Correct mapping: objective to control to domain or grouping

- Status rollups: verified objectives roll up correctly to satisfied controls

- Completion rules: what is required for “verified” or “audit-ready”

- Edge cases: partial completion, missing required evidence, conflicting statuses

Why it matters:

- False “compliant” is worse than “not compliant”

- Trust breaks instantly when pages show different results
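A rollup test needs an independent oracle. QA computes what each control's status should be from the underlying objective data, then compares that against every page that displays it. This sketch assumes a hypothetical rule: a control is "satisfied" only when all of its objectives are verified, "partial" when some are, and "not_satisfied" when none are. A real platform's completion rules may differ, and the oracle should follow the documented rule, not this one.

```python
from collections import defaultdict


def expected_control_status(objective_statuses: list[str]) -> str:
    """Hypothetical rollup rule: satisfied only when every objective is verified."""
    if not objective_statuses:
        # A control with no mapped objectives is a mapping defect, never 'satisfied'
        return "unmapped"
    verified = sum(1 for s in objective_statuses if s == "verified")
    if verified == len(objective_statuses):
        return "satisfied"
    return "partial" if verified else "not_satisfied"


def rollup_mismatches(objectives: list[tuple[str, str]],
                      reported_by_page: dict[str, dict[str, str]]) -> list[str]:
    """Compare the expected rollup with what each page reports for every control.

    objectives: (control_id, objective_status) pairs from the underlying data.
    reported_by_page: page name -> {control_id: status shown on that page}.
    """
    by_control = defaultdict(list)
    for control_id, status in objectives:
        by_control[control_id].append(status)
    problems = []
    for page, reported in reported_by_page.items():
        for control_id, statuses in by_control.items():
            expected = expected_control_status(statuses)
            shown = reported.get(control_id, "missing")
            if shown != expected:
                problems.append(f"{page}: {control_id} shows {shown}, expected {expected}")
    return problems
```

Checking every page against the same oracle catches both failure modes named above: a false "compliant" and two screens that disagree.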

3) Evidence lifecycle

Evidence is the backbone of compliance. QA treats evidence like a workflow, not a file upload.

We test:

- Upload and attach evidence to the right requirement

- States: uploaded, under review, verified

- Expiry behaviour: expiring soon, expired, flags or reminders (if available)

- Permission enforcement: only authorised roles can verify or unverify

- File integrity: preview and download reliability, large file handling

Why it matters:

- Expired evidence can quietly invalidate controls

- Broken links show up at the worst time, during audits
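Treating evidence as a workflow means testing it as a state machine. Every legal transition should succeed, every illegal one should be rejected, and verify or unverify should require an authorised role. Expiry is a boundary problem, so tests should pin down what happens exactly on the warning threshold and exactly on the expiry date. The transition table, `VERIFIER_ROLES`, the "sent back for rework" transition, and the 30-day warning window below are all assumptions for illustration.

```python
from datetime import date, timedelta

# Hypothetical lifecycle: legal state changes, and whether each needs verifier authority
TRANSITIONS = {
    ("uploaded", "under_review"): False,
    ("under_review", "verified"): True,     # verify
    ("under_review", "uploaded"): False,    # sent back for rework
    ("verified", "under_review"): True,     # unverify
}
VERIFIER_ROLES = {"assessor", "admin"}  # assumed authorised roles


def transition(state: str, target: str, role: str) -> str:
    """Return the new state, or raise if the move is illegal or unauthorised."""
    if (state, target) not in TRANSITIONS:
        raise ValueError(f"illegal transition {state} -> {target}")
    if TRANSITIONS[(state, target)] and role not in VERIFIER_ROLES:
        raise PermissionError(f"{role} may not move evidence {state} -> {target}")
    return target


def expiry_flag(expires_on: date, today: date, warn_days: int = 30) -> str:
    """Classify evidence freshness; evidence counts as expired on its expiry date."""
    if today >= expires_on:
        return "expired"
    if expires_on - today <= timedelta(days=warn_days):
        return "expiring_soon"
    return "current"
```

Whether evidence is still valid on its expiry date is a product decision. The point of the test is to make that decision explicit and check it, rather than discover it during an audit.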

4) Posture visibility (dashboards, trends, coverage)

Dashboards drive decisions quickly, which is why they must be correct.

We test:

- KPI accuracy: scores and counts match underlying data

- Trend correctness: progress updates accurately over time

- Drill-down trust: summary numbers lead to the exact backing dataset

- Filters: consistent behaviour across pages and exports

Why it matters:

- Incorrect metrics cause teams to prioritise the wrong work
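KPI accuracy and drill-down trust can be checked together. QA recomputes the headline numbers from raw records, then confirms that the drill-down behind a tile lists exactly the records that tile counts. This sketch assumes hypothetical record and dashboard shapes (`id`, `status`, `total`, `satisfied`, `score`). It also assumes the score is the percentage of satisfied controls rounded to one decimal place. Match the platform's documented formula instead.

```python
def recompute_kpis(controls: list[dict]) -> dict:
    """Independently recompute headline numbers from the raw control records."""
    total = len(controls)
    satisfied = [c["id"] for c in controls if c["status"] == "satisfied"]
    score = round(100 * len(satisfied) / total, 1) if total else 0.0
    return {"total": total, "satisfied": len(satisfied), "score": score,
            "satisfied_ids": sorted(satisfied)}


def kpi_mismatches(dashboard: dict, drill_down_ids: list[str],
                   controls: list[dict]) -> list[str]:
    """Flag dashboard figures that disagree with the data or with their own drill-down."""
    expected = recompute_kpis(controls)
    problems = [f"{k}: dashboard {dashboard.get(k)} != data {expected[k]}"
                for k in ("total", "satisfied", "score")
                if dashboard.get(k) != expected[k]]
    if sorted(drill_down_ids) != expected["satisfied_ids"]:
        problems.append("drill-down list does not match the records behind the tile")
    if len(drill_down_ids) != dashboard.get("satisfied"):
        problems.append("drill-down row count differs from the dashboard tile")
    return problems
```

A stale cache is the classic failure here. The tile says 3 while the drill-down shows 2, and both look plausible on their own.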

5) Gap analysis clarity

Gap analysis is where teams understand what to fix and why.

We test:

- Correct identification of gaps

- No false gaps

- Clear reasons so users understand why something is missing

- Stable navigation so users do not lose context

Why it matters:

- Confusion slows remediation and leaves high-risk gaps open longer
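Gap analysis tests compare the reported gaps against a hand-built fixture where the correct answer is known. That catches false gaps, missed gaps, and gaps reported without a reason. In this sketch, `find_gaps` is a hypothetical stand-in for the platform's gap logic, using an assumed rule that a gap is any required evidence type not yet provided. `compare_gaps` is the QA oracle.

```python
def find_gaps(controls: list[dict], evidence: dict[str, list[str]]) -> dict[str, str]:
    """Stand-in for the platform: {control_id: reason} for controls missing required evidence."""
    gaps = {}
    for control in controls:
        provided = set(evidence.get(control["id"], []))
        missing = [req for req in control["required"] if req not in provided]
        if missing:
            gaps[control["id"]] = "missing evidence: " + ", ".join(missing)
    return gaps


def compare_gaps(reported: dict[str, str], expected_ids: set[str]) -> list[str]:
    """QA oracle: no false gaps, no missed gaps, and a reason for every gap."""
    problems = [f"false gap: {cid}" for cid in sorted(set(reported) - expected_ids)]
    problems += [f"missed gap: {cid}" for cid in sorted(expected_ids - set(reported))]
    problems += [f"no reason given: {cid}"
                 for cid, why in sorted(reported.items()) if not why.strip()]
    return problems
```

Checking the reason text is part of the test, not decoration. A gap with no explanation sends users searching, which is exactly the delay this section warns about.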

6) Remediation and POA&M execution

This is where security improves. The platform must help convert gaps into owned action.

We test:

- Gap to remediation item creation (linked and traceable)

- Owner and due date enforcement

- Priority behaviour aligned with risk and impact logic

- Closure rules: resolved requires proof, not only a status change

- History supports handovers and review

Why it matters:

- “Closed” without proof is a false sense of security
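The remediation rules above translate into guard conditions that QA can probe directly. Creating an item without an owner or due date should fail. Closing it without proof should fail and leave the status unchanged. Every successful step should leave a history entry for handovers. `RemediationItem`, `create_item`, and `close_item` are hypothetical names for this sketch, and priority logic is left out because it depends on each platform's risk model.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class RemediationItem:
    # Hypothetical POA&M-style item, linked back to the gap it fixes
    gap_id: str
    owner: Optional[str]
    due: Optional[date]
    status: str = "open"
    proof: list[str] = field(default_factory=list)
    history: list[tuple[str, str]] = field(default_factory=list)


def create_item(gap_id: str, owner: Optional[str], due: Optional[date]) -> RemediationItem:
    """Refuse to create unowned or undated remediation work."""
    if not owner or due is None:
        raise ValueError(f"{gap_id}: remediation needs an owner and a due date")
    item = RemediationItem(gap_id, owner, due)
    item.history.append(("created", owner))
    return item


def close_item(item: RemediationItem, user: str) -> None:
    """Closure requires attached proof, not just a status change."""
    if not item.proof:
        raise PermissionError(f"{item.gap_id}: cannot close without proof")
    item.status = "resolved"
    item.history.append(("resolved", user))
```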

7) Reporting and exports

Reports are where trust is tested. This is what gets shared with auditors and stakeholders.

We test:

- Dashboard versus report match

- Completeness (no missing controls/evidence due to pagination or filters)

- Correct scoping (right organization, assessment, time window)

- Reliability under scale (large datasets export consistently)

Why it matters:

- If report numbers do not match the UI, trust collapses instantly
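Report completeness bugs often come from pagination, such as a last page that is dropped or pages that overlap. A reconciliation check reads every page of the export, then compares the row count with the dashboard, looks for duplicates, and confirms that every row belongs to the requested organization and assessment. `fetch_all` assumes a hypothetical offset/limit API where a page shorter than `page_size` marks the end. The row fields and `reconcile` are also illustrative, and a time-window check would follow the same pattern as the scope check.

```python
from typing import Callable


def fetch_all(fetch_page: Callable[[int, int], list[dict]], page_size: int = 100) -> list[dict]:
    """Read every page until a short page signals the end (assumed API contract)."""
    rows, offset = [], 0
    while True:
        page = fetch_page(offset, page_size)
        rows.extend(page)
        if len(page) < page_size:
            return rows
        offset += page_size


def reconcile(report_rows: list[dict], dashboard_count: int,
              org: str, assessment: str) -> list[str]:
    """Check an export against the dashboard count and its intended scope."""
    problems = []
    if len(report_rows) != dashboard_count:
        problems.append(f"report has {len(report_rows)} rows, dashboard shows {dashboard_count}")
    ids = [r["control_id"] for r in report_rows]
    if len(ids) != len(set(ids)):
        problems.append("duplicate rows (overlapping pages?)")
    out_of_scope = [r["control_id"] for r in report_rows
                    if r["org"] != org or r["assessment"] != assessment]
    if out_of_scope:
        problems.append(f"out-of-scope rows: {out_of_scope}")
    return problems
```

Running this with a small page size against a dataset that is not an exact multiple of it exercises the final partial page, which is where off-by-one errors tend to hide.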

Final Thoughts

QA in a security and compliance platform is not just about stability. It is about making the output trustworthy.

When QA is involved early, we validate the foundations before the platform becomes the source of high-impact decisions. That is what makes compliance traceable, enforceable, and dependable under audit pressure.

Read Part II next: QA Testing a Security & Compliance Platform: Trust, Defensibility, and the QA Mindset.