At Gurzu, we recently deployed a microservices architecture on Kubernetes for an important project. The application serves as the backbone for a well-known smart ring company whose IoT devices rely on GCP.

Kubernetes has become the leading platform for deploying, managing, and scaling containerized applications. When integrated with Google Cloud Platform (GCP), it offers a powerful and scalable environment for running modern applications.

In this article, we walk through the main aspects of deploying an application on Kubernetes in GCP using Google Kubernetes Engine (GKE).

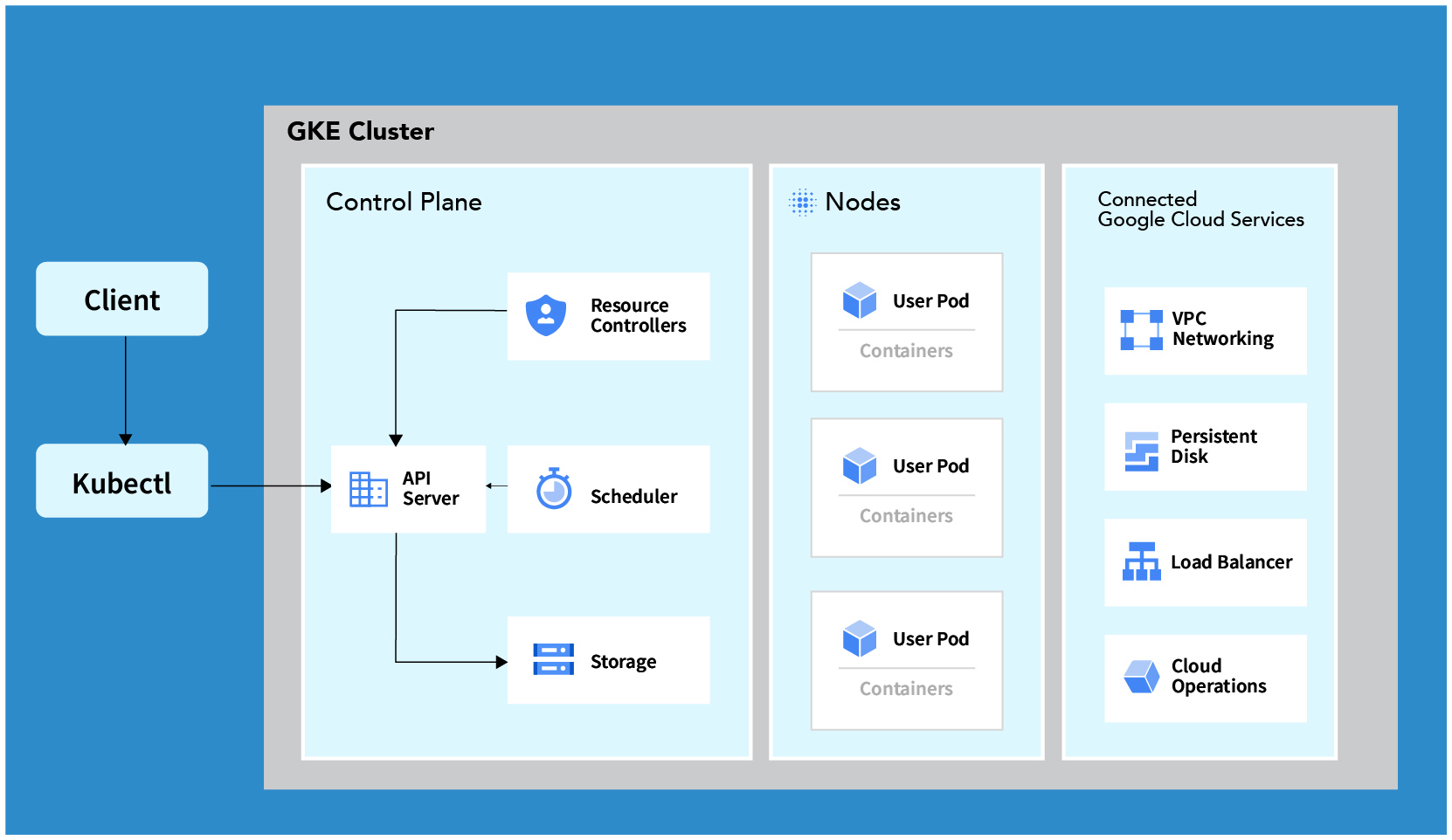

Architecture Overview

This architecture diagram outlines the key components involved in deploying an application on Kubernetes in GCP. These components can be broadly categorized into the following:

- Control Plane: The brain of the Kubernetes cluster, responsible for managing the overall state, scheduling, and scaling of applications.

- Nodes: The worker machines that run the application containers. Each node can host multiple pods.

- Pods: The smallest deployable units in Kubernetes, which can contain one or more containers.

- Networking: Ensures seamless communication between pods, services, and external clients.

- Storage: Provides persistent storage for application data, ensuring data durability and availability.

- Load Balancer: Distributes incoming traffic across multiple pods to ensure high availability and reliability.

- Google Cloud Services: Integrated services such as Cloud Storage, Cloud SQL, and others that enhance the functionality of your application.

Setting Up the Kubernetes Cluster on GCP

The first step in deploying an application is to set up a Kubernetes cluster on GCP. This involves creating a GCP project, enabling the Kubernetes Engine API, and configuring the cluster settings. The cluster can be customized to the application's requirements, including the number of nodes, machine types, and network configuration.
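As a rough sketch of this step, the Python snippet below assembles the `gcloud` commands that enable the Kubernetes Engine API (`container.googleapis.com`), create a regional GKE cluster, and fetch credentials for `kubectl`. The project ID, cluster name, region, node count, and machine type are placeholder assumptions, not the values we used on the project.

```python
import shlex


def cluster_setup_commands(project_id: str, cluster_name: str, region: str,
                           num_nodes: int = 3,
                           machine_type: str = "e2-standard-4") -> list:
    """Return gcloud commands (as argument lists) for a basic GKE setup.

    All default values here are illustrative placeholders.
    """
    return [
        # Enable the Kubernetes Engine API for the project.
        ["gcloud", "services", "enable", "container.googleapis.com",
         f"--project={project_id}"],
        # Create a regional cluster; for regional clusters --num-nodes
        # is the number of nodes per zone, not the cluster total.
        ["gcloud", "container", "clusters", "create", cluster_name,
         f"--project={project_id}",
         f"--region={region}",
         f"--num-nodes={num_nodes}",
         f"--machine-type={machine_type}"],
        # Write cluster credentials to kubeconfig so kubectl targets it.
        ["gcloud", "container", "clusters", "get-credentials", cluster_name,
         f"--project={project_id}", f"--region={region}"],
    ]


if __name__ == "__main__":
    # Hypothetical project and cluster names, for illustration only.
    for cmd in cluster_setup_commands("my-project", "demo-cluster", "us-central1"):
        print(shlex.join(cmd))
```

Running it prints shell-ready commands for a machine with the Google Cloud CLI installed. Building argument lists instead of strings keeps quoting safe if the commands are later passed to `subprocess.run`.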


Containerizing the Application

Before an application can be deployed on Kubernetes, it needs to be containerized. This involves creating a Docker image of the application. The Docker image encapsulates the application and its dependencies, ensuring consistency across different environments. Once the image is built, it is pushed to a container registry such as Google Container Registry (GCR), making it accessible to the Kubernetes cluster.
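A minimal sketch of the build-and-push step, assuming a `Dockerfile` in the current directory and the `gcr.io/PROJECT_ID/IMAGE:TAG` naming scheme used by Container Registry. The project ID, image name, and tag below are hypothetical.

```python
import shlex


def gcr_image(project_id: str, name: str, tag: str, host: str = "gcr.io") -> str:
    """Build a Container Registry image reference: HOST/PROJECT_ID/NAME:TAG."""
    return f"{host}/{project_id}/{name}:{tag}"


def build_and_push_commands(image: str, context_dir: str = ".") -> list:
    """Return the commands that build an image and push it to the registry."""
    return [
        # One-time setup: let Docker authenticate to gcr.io via gcloud.
        ["gcloud", "auth", "configure-docker"],
        # Build from the Dockerfile in context_dir and tag with the full reference.
        ["docker", "build", "-t", image, context_dir],
        # Push the tagged image so cluster nodes can pull it.
        ["docker", "push", image],
    ]


if __name__ == "__main__":
    image = gcr_image("my-project", "ring-api", "v1")  # hypothetical values
    for cmd in build_and_push_commands(image):
        print(shlex.join(cmd))
```

Tagging with an explicit version (rather than relying on `latest`) makes it clear which build a Deployment is running and simplifies rollbacks.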

Deploying the Application

With the Kubernetes cluster ready and the application containerized, the next step is to deploy the application. This is done by defining a Kubernetes Deployment, which specifies the desired state of the application, including the number of replicas, the container image, and resource limits. The Deployment keeps the specified number of application instances (pods) running, replaces pods that fail, and rolls out new image versions as rolling updates. The replica count can be changed manually or by an autoscaler.
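To make the Deployment concrete, here is a sketch that builds an `apps/v1` Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The name `ring-api`, the image path, the port, and the resource values are illustrative assumptions.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080,
                        cpu_request: str = "250m", memory_request: str = "256Mi",
                        cpu_limit: str = "500m", memory_limit: str = "512Mi") -> dict:
    """Return a minimal Deployment manifest (all defaults are placeholders)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # desired number of pods
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {
                # Pod labels must match the selector above.
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        "resources": {
                            # Requests guide scheduling; limits cap usage.
                            "requests": {"cpu": cpu_request, "memory": memory_request},
                            "limits": {"cpu": cpu_limit, "memory": memory_limit},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("ring-api", "gcr.io/my-project/ring-api:v1")
    # Save the output to deployment.json, then: kubectl apply -f deployment.json
    print(json.dumps(manifest, indent=2))
```

Generating manifests from code is one option among several; many teams keep plain YAML files or use a templating tool instead. The structure of the manifest is the same either way.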

Exposing the Application

To make the application accessible to users, it needs to be exposed to the internet. This is achieved by creating a Kubernetes Service, which acts as a stable endpoint for the application and routes traffic to the matching pods. Setting the Service type to LoadBalancer makes GKE provision a Google Cloud load balancer that distributes incoming traffic across those pods.
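A sketch of such a Service, again built as a dictionary that can be serialized to JSON for `kubectl apply -f`. It assumes the pods carry the label `app: ring-api` and listen on port 8080; both are hypothetical.

```python
import json


def load_balancer_service(name: str, app_label: str, port: int = 80,
                          target_port: int = 8080) -> dict:
    """Return a Service of type LoadBalancer that fronts pods labelled app=<app_label>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # On GKE this type provisions an external Google Cloud load balancer.
            "type": "LoadBalancer",
            # Traffic is routed to every ready pod with this label.
            "selector": {"app": app_label},
            # External port -> container port.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(load_balancer_service("ring-api", "ring-api"), indent=2))
```

After applying it, `kubectl get service ring-api` shows the external IP in the `EXTERNAL-IP` column once the load balancer has been provisioned, which can take a minute or two.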

Managing Storage and Networking

Applications often require persistent storage for data that needs to survive pod restarts. Kubernetes provides PersistentVolumes and PersistentVolumeClaims to manage storage resources. PersistentVolumes represent actual pieces of storage in the cluster, while PersistentVolumeClaims are requests for storage made on behalf of an application. On GKE, a claim is typically fulfilled by dynamically provisioning a Compute Engine persistent disk. By configuring these resources, we can ensure that applications have access to durable and scalable storage.
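The claim-and-mount pattern can be sketched as follows: one function builds a PersistentVolumeClaim, and a second attaches it to a pod spec. The claim name, size, and mount path are assumptions for illustration. Leaving `storageClassName` unset lets the cluster's default StorageClass provision the volume.

```python
import json
from typing import Optional


def persistent_volume_claim(name: str, size: str = "10Gi",
                            storage_class: Optional[str] = None) -> dict:
    """Return a PersistentVolumeClaim requesting `size` of storage."""
    spec = {
        # ReadWriteOnce: the volume can be mounted read-write by a single node.
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        # Without this field, the cluster's default StorageClass is used.
        spec["storageClassName"] = storage_class
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name}, "spec": spec}


def mount_claim(pod_spec: dict, claim_name: str, mount_path: str) -> dict:
    """Attach a claim to the first container of a pod spec (modifies it in place)."""
    pod_spec.setdefault("volumes", []).append(
        {"name": claim_name, "persistentVolumeClaim": {"claimName": claim_name}})
    pod_spec["containers"][0].setdefault("volumeMounts", []).append(
        {"name": claim_name, "mountPath": mount_path})
    return pod_spec


if __name__ == "__main__":
    print(json.dumps(persistent_volume_claim("ring-data", "20Gi"), indent=2))
```

Because a ReadWriteOnce volume attaches to one node, it suits a single-replica workload or a StatefulSet (one claim per pod) better than a multi-replica Deployment spread across nodes.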

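Networking in Kubernetes is designed to facilitate communication between pods, services, and external clients. Kubernetes Network Policies can be used to control traffic flow and enforce security rules, so that only authorized communication is allowed. On GKE, network policy enforcement must be enabled on the cluster (it is built into GKE Dataplane V2) before these policies take effect.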
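As an illustration, the sketch below builds a NetworkPolicy that lets only pods labelled `app: ring-api` reach pods labelled `app: ring-db` on one TCP port. The labels and the port are hypothetical. Once a policy selects a pod for ingress, any ingress traffic not allowed by some policy is dropped.

```python
import json


def allow_from_app_policy(name: str, target_app: str, allowed_app: str,
                          port: int) -> dict:
    """Allow ingress to app=<target_app> pods only from app=<allowed_app> pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # The pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only pods with this label, in the same namespace, may connect...
                "from": [{"podSelector": {"matchLabels": {"app": allowed_app}}}],
                # ...and only on this port.
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = allow_from_app_policy("db-allow-api", "ring-db", "ring-api", 5432)
    print(json.dumps(policy, indent=2))
```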

Monitoring and Scaling

Once the application is deployed, it is crucial to monitor its performance and ensure it can handle varying loads. Google Cloud Operations (formerly Stackdriver) provides comprehensive monitoring and logging capabilities, allowing you to track the health and performance of both the cluster and the application.
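One low-effort way to make application logs more useful in Cloud Logging is to write them to stdout as one JSON object per line. Our understanding of the GKE structured logging documentation is that the logging agent parses such lines and treats fields like `severity` and `message` specially, so log levels become filterable. The formatter below is a minimal sketch under that assumption; the logger name is hypothetical.

```python
import json
import logging
import sys


class CloudLoggingFormatter(logging.Formatter):
    """Format each record as a single JSON line for Cloud Logging to parse."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # Python level names (INFO, WARNING, ERROR, ...) match Cloud Logging severities.
            "severity": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        if record.exc_info:
            # Append the traceback so errors stay in one log entry.
            entry["message"] += "\n" + self.formatException(record.exc_info)
        return json.dumps(entry)


def get_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout (configured once)."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(CloudLoggingFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    get_logger("ring-api").warning("sync queue length %d", 120)
```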

Scaling is another critical aspect of managing applications on Kubernetes. The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pod replicas based on CPU or memory usage, ensuring that your application can handle increased traffic without manual intervention.
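To show how the HPA decides on a replica count, the sketch below implements a simplified form of the documented rule, `desired = ceil(current * currentMetric / targetMetric)`, including the default 10% tolerance, and builds an `autoscaling/v2` manifest targeting average CPU utilization. The real controller also accounts for pod readiness and missing metrics, which this sketch ignores. The names and replica bounds are assumptions. CPU utilization is measured against the containers' CPU requests, so the target Deployment needs requests set.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 2,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single utilization metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


def hpa_manifest(name: str, deployment: str, min_replicas: int = 2,
                 max_replicas: int = 10, cpu_target: int = 60) -> dict:
    """Return an HPA that keeps average CPU utilization near cpu_target percent."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_target},
                },
            }],
        },
    }


if __name__ == "__main__":
    # 4 pods at 90% CPU with a 60% target -> ceil(4 * 1.5) = 6 pods.
    print(desired_replicas(4, 90, 60))
```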

Conclusion

Deploying an application on Kubernetes in GCP involves a series of well-defined steps, from setting up the cluster to managing storage, networking, and scaling. By leveraging the features of Kubernetes and the integrated services of GCP, we created a robust, scalable, and efficient environment for the application. The architecture diagram serves as a visual guide to the interactions between the different components and helps ensure a smooth deployment process.


Want to leverage the power of Kubernetes and GCP for your next project? We can help! Book a free consulting call with a Gurzu cloud expert and explore your options.